
Gary Novak

The Cause of Ice Ages and Present Climate

(Quasi Preface) At the engineering level, you simply try to find repeatable patterns that allow products to be reproduced. Physicists take that approach. It might be called super engineering, but it is not science. To make it science, the unknowns are filled in with contrivance. Over time, the corruption gets compounded until it becomes an alternative to real science. Real scientists do not go down the path climatologists follow, pretending to measure complexities and randomness that cannot be measured. A measurement requires that all influences over the results be identified and separated from other influences. Climate has too many interacting complexities to do that. For these reasons, there is nothing resembling real science in the subject of global warming. Science is a process, not a conclusion; yet in global warming science, conclusions come out of a dark pit. Fake procedures are claimed with no explanation or logical purpose. Necessary scientific standards are defied in extreme ways in an attempt to contrive a subject without accountability. Another major problem is the "signal-to-noise ratio": minute effects are supposedly measured, while huge influences overwhelm the measurements.

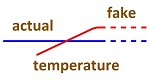

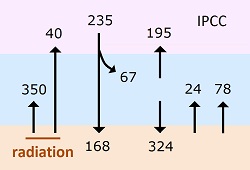

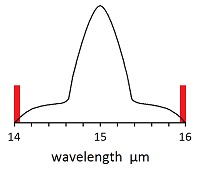

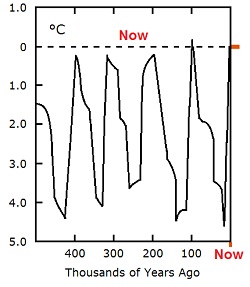

An example is the pretense of measuring an increase in ocean temperatures. Supposedly, the average ocean temperature increased 0.2°C over the past several decades. The heterogeneity of the oceans is too extreme and too rapidly changing for the trivial measurements being made. A claimed point of measurement doesn't say whether it represents five feet of water or five hundred miles of water. The temperatures can vary by several degrees in a few feet. Physicists, including climatologists, have a bad habit of pretending to get a representative average out of several measurements, with no accounting for the unknowable variations. A large part of what they do is framed in statistical analysis; yet they don't bother with the statistical impossibility of getting a properly representative average out of unknown variations. Any temperature in the oceans could be due to any number of unknown effects other than humans putting carbon dioxide into the air.

Evaporation removes much more heat from the oceans than the minuscule quantities supposedly being measured. The heat gets replaced by the sun and accumulates, which also occurs in huge quantities compared to the minuscule effects claimed for carbon dioxide in the air. The sun is said to add 168 watts per square meter to the earth, including the oceans, while the claimed temperature increase of the oceans due to carbon dioxide in the air is said to be added through 0.27 W/m² (published article). That's a noise-to-signal ratio of 622 to 1 (168 ÷ 0.27 ≈ 622). Most of the sun's energy leaves through evaporation, but some stays in the ocean. How much stays is impossible to guess. Between ice ages, solar heat slowly accumulates in the oceans. The average increase is imperceptibly slow, while 168 W/m² from the sun is going into and out of the oceans constantly. The amount equilibrates, which means it stabilizes with all interacting forces. According to climatologists, much more radiation than 168 W/m² goes into the oceans.
They have huge amounts of radiation going into the oceans from the atmosphere and radiating back out. They use extreme amounts of radiation because that is necessary for contriving a greenhouse effect. This image shows the numbers they use. They have an additional 324 W/m² radiating from the atmosphere to the earth, including the oceans (which are 71% of the surface area of the earth), and 390 W/m² radiating back out, with only 78 W/m² leaving by evaporation and 24 W/m² leaving by conduction. That leaves 0.05% of the energy staying in the oceans and adding heat, while 99.95% exits the oceans (0.27 ÷ (168 + 324) ≈ 0.0005). That's a noise-to-signal ratio of 1,822 to 1. In actuality, there is almost no radiation emitted by the cold average surface temperature of the earth, said to be 15°C (59°F). I estimate that 1% of the energy leaving the surface of the earth is in the form of radiation, with the rest being conduction and evaporation.

Climatologists don't determine the 0.27 W/m² in a direct manner; they claim to measure the temperature increase of the oceans and then calculate how much radiation would be required to produce that much heat when accumulating over 40 years. How come the 168 W/m² of energy that the sun puts into the oceans comes back out with no detectable amount staying in, while the 0.27 W/m² of energy that humans put into the oceans stays there and accumulates for 40 years with not an iota coming back out? Logic is sacrificed to contrivance in climatology. The primary absurdity is that determining an average temperature of the oceans, repeated over the past 40 years, is totally impossible. The oceans are extremely heterogeneous, with rivers of motion and mountains of hot and cold temperatures. A few years ago, a project called ARGO used 3,000 diving buoys to measure temperatures over the top 700 meters of the oceans. That's 351 kilometers of space between each one on average (3.7×10⁸ km² ÷ 3,000 ≈ 123,000 km² per buoy; √123,000 km² ≈ 351 km).
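
The ratios and the buoy spacing in the two passages above can be reproduced with a few lines of Python. This is a minimal sketch that only redoes the arithmetic with the figures quoted in the text (168, 324 and 0.27 W/m², an ocean area of 3.7×10⁸ km², 3,000 buoys). The function names are hypothetical, and the spacing treats each buoy as covering an equal square patch, which is the simplification the square root in the text implies.

```python
import math

def noise_to_signal(background_w_m2: float, signal_w_m2: float) -> float:
    """Ratio of a background energy flux to the claimed signal flux."""
    return background_w_m2 / signal_w_m2

def mean_spacing_km(area_km2: float, n_buoys: int) -> float:
    """Side of the square patch each buoy would cover if spread evenly."""
    return math.sqrt(area_km2 / n_buoys)

SOLAR = 168.0    # W/m^2, solar input quoted in the text
BACK = 324.0     # W/m^2, atmosphere-to-surface figure quoted in the text
SIGNAL = 0.27    # W/m^2, ocean heat uptake figure quoted in the text

solar_ratio = noise_to_signal(SOLAR, SIGNAL)           # about 622
total_ratio = noise_to_signal(SOLAR + BACK, SIGNAL)    # about 1,822
retained_fraction = SIGNAL / (SOLAR + BACK)            # about 0.00055
spacing = mean_spacing_km(3.7e8, 3000)                 # about 351 km

print(f"168/0.27         = {solar_ratio:.0f}")
print(f"(168+324)/0.27   = {total_ratio:.0f}")
print(f"0.27/(168+324)   = {retained_fraction:.4%}")
print(f"buoy spacing, km = {spacing:.0f}")
```

The retained fraction works out to about 0.055%, which the text rounds to 0.05%.
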
There is an infinite amount of variation within every 351 km of ocean, and it changes constantly. The mentality throughout climatology and physics is that if you collect enough nonsensical data points on any question, they will average out to a representative value. That assumption implies that infinite measurements are made over the domain in question. Yet physicists and climatologists can only measure minuscule fractions of the variations that occur. The error is in assuming that a minuscule number of measurements will produce the same average as an infinite number of measurements. Climatologists get a huge amount of random error in their minuscule measurements, so much so that they can't get anything close to an actual number for the temperature of the oceans, and hence for global average surface temperatures. The claimed measurements are contrived by starting at desired end points and faking a method of getting there.

Science requires standards for these reasons. Standards are not arbitrary in science; they are needed to overcome corruption of the process. It's not just a question of precision or convenience; it's a question of verifying with reliability. Science has the purpose of verifying. If it doesn't verify, it's not science. The main problem is that procedures are not explained, and leaving them unexplained blocks scientific criticism. Blocking criticism and accountability is not an alternative in science; it is a total absence of science. In place of methodology, a few mockeries are splattered onto the page, such as a few simple math equations. They tell nothing. Methodology has to explain why and how in terms that can be checked. It doesn't exist in physics or climatology. The pretense is that everything is too complex, as if an encyclopedia would be required. Bull roar. If it isn't described, it isn't science. Anything that is actually done can be described.
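
The concern about a few measurements standing in for an infinite number can be illustrated with a toy example. This sketch assumes a hypothetical one-dimensional temperature field (not ocean data) with a 15°C mean and a ±5°C ripple whose wavelength happens to equal the 351 km sampling interval. Evenly spaced samples then all land on the same phase of the ripple, so their mean misses the true mean by the full amplitude. This is the standard sampling effect known as aliasing, named here as an illustration rather than as something the text describes.

```python
import math

WAVELENGTH_KM = 351.0   # hypothetical ripple wavelength, chosen to equal the sample spacing

def field(x_km: float) -> float:
    """Hypothetical temperature (deg C) along a 1-D transect: a 15 deg C mean
    plus a 5 deg C ripple. Illustrative only, not ocean data."""
    return 15.0 + 5.0 * math.cos(2.0 * math.pi * x_km / WAVELENGTH_KM)

def sampled_mean(spacing_km: float, n: int, start_km: float = 0.0) -> float:
    """Mean of n point samples taken every spacing_km, starting at start_km."""
    return sum(field(start_km + i * spacing_km) for i in range(n)) / n

def dense_mean(length_km: float, n: int = 100_000) -> float:
    """Near-exact mean over [0, length_km] from many closely spaced samples."""
    step = length_km / n
    return sum(field((i + 0.5) * step) for i in range(n)) / n

true_mean = dense_mean(10 * WAVELENGTH_KM)        # very close to 15
at_crests = sampled_mean(WAVELENGTH_KM, 10)       # every sample hits a crest
at_troughs = sampled_mean(WAVELENGTH_KM, 10, start_km=WAVELENGTH_KM / 2)
print(f"true mean {true_mean:.2f}, sparse means {at_crests:.2f} and {at_troughs:.2f}")
```

Shifting the starting point by half a wavelength moves the sparse estimate from 20°C to 10°C, while the field itself is unchanged.
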
Researching the claimed complexities would require hundreds of laboratories working for hundreds of years, and even then most of the subject could not be reduced to analysis due to randomness and complexity. But each step of progress in real science would be explained with real methodology. Real scientists do so; only fakes refuse to. One of the absurdities is that endless complexities can supposedly be taken care of in one study. Every modeling study since the mid-seventies supposedly accounted for every detail of climate, including radiative transfer, primary effects, secondary effects, weather, temperature, atmospheric circulation, reflection and absorption, ocean heat and currents, clouds, aerosols, pollution, etc. Real science would require breaking those subjects down into a million studies.

One of the false standards of physics is to pretend that complexities can be reduced to an average that represents the net effect. Averaging replaces the scientific studies that would be needed for complexities. Averaging is not a substitute for measurement: the accuracy cannot be determined, and complexities do not reduce to an average. The average motion of a meat grinder is zero, while the non-average motion grinds the meat. Of course, you can define average however you want, but the vagueness shows what the problem is, not what the answer is. Therefore, critics have to cover all possibilities and argue against hypothetical procedures. This set of circumstances totally nullifies the science of the subject, not just because of technicalities, but because no real scientist would omit the necessary explanations. Only frauds would present a subject in such a manner. If the frauds had one iota of evidence to support their claims, it would be on the billboards. The complete absence of explanations is due to a complete absence of science in the claims.
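
The meat-grinder remark can be made concrete: an oscillating quantity can average to zero while its typical magnitude does not. The sketch below assumes a hypothetical back-and-forth motion described by a unit-amplitude sine wave and compares the plain mean over whole cycles with the root-mean-square (RMS) magnitude.

```python
import math

def velocity(t: float) -> float:
    """Hypothetical back-and-forth motion with unit amplitude and unit period."""
    return math.sin(2.0 * math.pi * t)

def mean_and_rms(n: int = 10_000, cycles: int = 5) -> tuple[float, float]:
    """Sample whole cycles evenly; return (mean, root-mean-square)."""
    samples = [velocity(cycles * i / n) for i in range(n)]
    mean = sum(samples) / n
    rms = math.sqrt(sum(v * v for v in samples) / n)
    return mean, rms

mean, rms = mean_and_rms()
print(f"mean = {mean:.6f}, rms = {rms:.4f}")  # mean ~0, rms ~0.7071 (1/sqrt(2))
```

The mean carries no information about how vigorous the motion is; a second statistic such as the RMS is needed for that.
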
Digging down through the central core of the subject looks like this. The simplest measurements have shown for more than a century that saturation totally prevents a temperature increase due to greenhouse gases, including carbon dioxide. To get a result in spite of this fact, calculations were made to show an increase, with no explanation of how saturation was overcome. The calculations are called radiative transfer equations. The atmosphere is split into numerous thin slices, and the radiation coming from and going into each slice is calculated. Coming out the top of the atmosphere is the radiation that supposedly creates global warming. Saturation allows none of the radiation in question to travel from the bottom to the top of the atmosphere. So how does it get there? The radiative transfer equations remove the effects of saturation. It's not possible to remove saturation through a mathematical procedure. If real science were involved, critics could show where the error in the math is located, but there is no real science to evaluate. The world's largest computers were used for the calculations, and of course nothing about the process was published, only the magical endpoint.

The effects of saturation can be measured in a few minutes in a laboratory. So why do an extremely complex calculation to replace the measurement? Obfuscation is the only reason. The differences are more than immense: a total blocking of radiation is replaced by almost no blocking. Laboratory measurements show saturation to occur in 10 meters at ground level. Such measurements are highly reliable, as there are no major complexities over such a short distance. But the calculations that replaced measurements must account for everything in the atmosphere, because they show most of the radiation in question going through the entire atmosphere. The complexities cannot be accounted for. There are no theories or studies for most of the complexities. Radiation travels in all directions from infinite sources.
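
The "slicing" step can be sketched in its simplest possible form. The code below is a minimal absorption-only version, assuming the Beer-Lambert law with a single made-up absorption length. It is not a radiative transfer code: real ones also add the radiation each slice emits, handle many wavelengths and directions, and use measured absorption data. It only shows that attenuating a beam slice by slice gives the same result as applying the law over the whole path at once.

```python
import math

def transmitted_fraction(path_m: float, absorption_length_m: float, n_slices: int) -> float:
    """Fraction of a beam left after crossing path_m, applied one slice at a time.

    Each slice of thickness dz passes exp(-dz / absorption_length_m) of what
    enters it (Beer-Lambert, absorption only, no re-emission).
    """
    dz = path_m / n_slices
    fraction = 1.0
    for _ in range(n_slices):
        fraction *= math.exp(-dz / absorption_length_m)
    return fraction

ELL = 2.0   # hypothetical absorption length in meters (illustrative only)
for n in (1, 10, 1000):
    print(n, transmitted_fraction(10.0, ELL, n))
print("closed form:", math.exp(-10.0 / ELL))   # about 0.0067 after 10 m
```

With the assumed 2 m absorption length, about 99.3% of the beam is absorbed within 10 m, and the number of slices does not change that result.
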
Much of it gets absorbed into the oceans (70% of the earth's surface), which are too complex to evaluate. The amount of time between absorption and re-emission of radiation determines the resulting temperature, and it cannot be evaluated due to infinite complexities. No radiation or heat on planet earth has an identifiable or quantifiable starting point other than the total entering from the sun. Implicitly, the radiation at the starting point is that which is emitted from the surface of the earth. No one has a clue as to what that quantity would be. So why not just use the laboratory measurement for saturation instead of replacing it with calculations? Because the direct measurement eliminates the entire subject of global warming due to greenhouse gases.

The calculations slice the atmosphere into small pieces starting at ground level. One explanation sometimes given is that radiation near the top of the normal atmosphere (troposphere) goes into space without saturation, and it creates the global warming. If so, there is no point in doing radiative transfer equations to track radiation through the lower atmosphere. The place where the global warming supposedly occurs is usually said to be 9 kilometers up, while the top of the troposphere varies from 12 to 15 km up. The atmospheric pressure at the top is about one tenth of that at sea level. Saturation occurs in 10 meters at sea level, which means it occurs in 100 meters at one tenth the pressure at the top of the troposphere. At 9 km up, the distance for saturation would be 70 meters. Those short distances are all functionally equivalent, as convection stirs air over such short distances. It means saturation is almost the same where the supposed global warming occurs. An old argument is that the shoulders of the absorption peaks are not saturated, but that argument is too easy to criticize and is not seen nowadays.
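
The distance scaling used in this passage, and in the later note on unsaturated shoulders, can be written out as simple proportions. This sketch assumes, as the text does, that the saturation distance grows in inverse proportion to pressure (fewer absorbing molecules per meter) and in inverse proportion to the relative absorption strength. The 10 m and 70 m figures are taken from the text, not computed, and the function name is hypothetical.

```python
def scaled_saturation_length(base_m: float, pressure_ratio: float = 1.0,
                             relative_strength: float = 1.0) -> float:
    """Scale a saturation distance by simple inverse proportions.

    pressure_ratio: local pressure divided by the pressure where base_m applies.
    relative_strength: absorption strength relative to the peak center
    (e.g. 0.05 for a shoulder absorbing at 5% of the center's strength).
    """
    return base_m / (pressure_ratio * relative_strength)

top = scaled_saturation_length(10.0, pressure_ratio=0.1)            # 100 m near the tropopause
shoulder_ground = scaled_saturation_length(10.0, relative_strength=0.05)   # 200 m at ground level
shoulder_9km = scaled_saturation_length(70.0, relative_strength=0.05)      # 1,400 m from the text's 70 m
print(top, shoulder_ground, shoulder_9km)
```
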
If the nonsaturated shoulders are 5% of the CO2, there are 50,000 air molecules around each CO2 molecule that supposedly does the heating. The heating is a continuous rate, which means each CO2 molecule doing the heating must have 50,000 times as much heat moving through it as goes into each surrounding air molecule. The temperature ratios would be approximately the same, meaning the CO2 molecules would have to heat up to 50,000°C to heat the surrounding air molecules by 1°C when shoulders at 5% are doing the heating. It isn't possible. If less than 5% of the CO2 is in the unsaturated shoulders, the active molecules are spread even thinner, and each must be hotter to conduct heat to the surrounding air molecules. When the radiation is so weak that it gets totally absorbed in 10 meters at the center of the absorption peak, it can't heat anything to 50,000°C.

The main cause of secondary effects is said to be an increase in water vapor in the air; water vapor is a stronger greenhouse gas than CO2. Water vapor saturates more easily than CO2, so it does nothing even more thoroughly. One of the errors is in claiming that the atmosphere heats the oceans, causing the secondary effect. The atmosphere has no significant ability to influence ocean temperatures, because there is very little heat capacity in air. The oceans have 1,000 times as much heat capacity as the atmosphere, because mass (weight) is the primary factor determining heat capacity. It means humidity could not increase significantly with a slight increase in atmospheric temperature, because virtually no increase in ocean temperature would result. Climatologists also assume that warmer air will acquire more absolute humidity due to its increased holding capacity. But the temperature of the air over the oceans will equilibrate at ocean temperature and will not significantly increase in holding capacity while in contact with the surface of the oceans.

More relevant is the constant changing of ocean surface temperature for unknown reasons related to circulation in the oceans. The oceans are extremely heterogeneous in temperature, consisting of mountains and rivers of variation. Since the oceans have much more heat capacity than the atmosphere, it will be the oceans that influence the atmosphere, not the atmosphere that influences the oceans. For this reason, it is totally absurd to hear the constant references in the media to increasing ocean temperatures causing endless weather effects, including hurricanes and the bleaching of corals. Oceans heat up in a slow and continuous manner between ice ages, because the sun's energy penetrates to 10 meters, causing heat to accumulate. Only ice thawing after an ice age allows the oceans to cool back down.
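
The 1,000-to-1 heat capacity figure can be checked with round textbook values. This sketch assumes an ocean mass of about 1.4×10²¹ kg, a seawater specific heat of about 3,990 J/(kg·K), an atmospheric mass of about 5.1×10¹⁸ kg and a specific heat of air (at constant pressure) of about 1,005 J/(kg·K). None of these numbers appear in the text, so treat them as approximate inputs.

```python
OCEAN_MASS_KG = 1.4e21        # approximate total mass of the oceans (assumed)
SEAWATER_CP = 3990.0          # J/(kg*K), approximate specific heat of seawater (assumed)
ATMOSPHERE_MASS_KG = 5.1e18   # approximate total mass of the atmosphere (assumed)
AIR_CP = 1005.0               # J/(kg*K), specific heat of dry air at constant pressure (assumed)

def heat_capacity(mass_kg: float, cp: float) -> float:
    """Total heat capacity in J/K."""
    return mass_kg * cp

ocean = heat_capacity(OCEAN_MASS_KG, SEAWATER_CP)
air = heat_capacity(ATMOSPHERE_MASS_KG, AIR_CP)
mass_ratio = OCEAN_MASS_KG / ATMOSPHERE_MASS_KG
print(f"mass ratio          ~ {mass_ratio:.0f}")    # ~275
print(f"heat capacity ratio ~ {ocean / air:.0f}")   # ~1090
```

With these inputs the mass ratio alone is roughly 275, and the higher specific heat of water supplies the remaining factor of about 4, consistent with mass being the larger contributor.
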
Climatologists supposedly calculate how much heat goes from the atmosphere to the surface based upon the fudge factor, which indicates that 3.7 watts per square meter are heating the planet due to CO2 in the atmosphere as the primary effect, and twice that much due to secondary effects. If such radiation actually existed, it would disappear into the oceans and show no significant temperature increase over that 70% of the earth's surface. But the fake primary effect could not heat the surface of the earth even if it existed, because the mechanism (obstructing radiation) would be occurring at the top of the atmosphere, and there is no way to get the heat back down to the surface. The pretense is that it all occurs automatically. It couldn't happen. To radiate energy downward would require a 24°C increase up high to create a 1°C increase near the surface. With the assumed obstruction occurring at the top of the atmosphere and no way to get the heat down to the surface, no secondary effects could occur for that reason alone, besides the whole concept of secondary effects being ridiculous.

There is another effect. Radiation being re-emitted after being absorbed by a greenhouse gas is similar to conduction in transferring heat from more concentrated areas to less concentrated ones. This movement of energy is the basis for the radiative transfer equations. One of the major problems is that there is no concept of a time factor in transferring energy this way, and the time will determine the temperature change. For example, if a metal rod is heated at one end, its temperature will be determined by how fast heat moves through the metal. This movement of heat is totally beyond evaluation for the atmosphere. If heat is blocked from escaping from the far end, supposedly, more heat will accumulate at the heated end. This logic applies more to a water pipe than to a heated rod, but it is supposedly how blockage at the top of the atmosphere heats the near-surface atmosphere.
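
The heated-rod analogy can be simulated with the standard explicit finite-difference (FTCS) scheme for one-dimensional heat diffusion. This is a minimal sketch in arbitrary units: the rod has a hypothetical 20 cells, the heated end is held at 100, and the far end is either held at 0 (heat escapes) or insulated (heat is blocked). The grid parameter alpha = D·Δt/Δx² must not exceed 0.5 for this scheme to stay stable. The sketch shows the two points made above: the profile depends on how long heating has gone on, and blocking the far end raises temperatures along the rod. It also ignores heat loss through the sides of the rod, the simplification the next paragraph objects to.

```python
def rod_profile(steps: int, insulated_end: bool, n_cells: int = 20,
                alpha: float = 0.25, hot: float = 100.0, cold: float = 0.0) -> list[float]:
    """Explicit (FTCS) 1-D heat diffusion along a rod, arbitrary units.

    Cell 0 is held at `hot`. The last cell is either held at `cold`
    (heat escapes) or insulated (zero-flux boundary). No side losses.
    """
    if not 0.0 < alpha <= 0.5:
        raise ValueError("alpha must be in (0, 0.5] for the explicit scheme to be stable")
    t = [cold] * n_cells
    t[0] = hot
    for _ in range(steps):
        new = t[:]
        for i in range(1, n_cells - 1):
            new[i] = t[i] + alpha * (t[i - 1] - 2.0 * t[i] + t[i + 1])
        if insulated_end:
            # mirror (ghost) node: no heat flows out of the far end
            new[-1] = t[-1] + 2.0 * alpha * (t[-2] - t[-1])
        else:
            new[-1] = cold
        t = new
    return t

def mean(values: list[float]) -> float:
    """Arithmetic mean of a list of cell temperatures."""
    return sum(values) / len(values)

short_open = rod_profile(50, insulated_end=False)
long_open = rod_profile(2000, insulated_end=False)
long_blocked = rod_profile(2000, insulated_end=True)
print(f"mean after 50 steps, open end:      {mean(short_open):.1f}")
print(f"mean after 2000 steps, open end:    {mean(long_open):.1f}")
print(f"mean after 2000 steps, blocked end: {mean(long_blocked):.1f}")
```

With the open end, the rod settles toward a straight-line profile from 100 to 0; with the insulated end, the whole rod creeps toward 100.
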
To get this effect, all heat escaping from the side of the rod, or throughout the atmosphere, must be ignored. It certainly cannot be quantified. And then the amount of radiation going directly around the greenhouse gases escapes instantaneously, and it is either 15% or 30%. These are all error factors in determining the primary effect, a supposed 1°C near-surface temperature increase upon doubling the amount of CO2 in the air, while the end result is claimed to have somewhere around 1% error. None of these factors can actually be quantified through calculation or measurement. If these explanations are not correct, what is? Science must answer such questions, or it isn't science. What we see on the internet is "debunking" of all criticism. Debunking is a subjective rant with no explanations. It is not called criticism, because social standards require more objective reality and explanation with criticism.

Saturation Contradictions

If saturation does not occur in 10 meters for CO2, why isn't the real distance being stated and evaluated? It would have been measured endlessly. Going around it with calculations means the fakes didn't like the results. The thing that really condemns them is that it is not possible to do the calculations they claim. Even if they could calculate the radiation, they could not convert it into a temperature, as explained on this temperature page. It means they created calculations they could not use, for the sole purpose of erasing saturation, because it could not be erased with direct measurements.

Primary Effect Contradictions

The methodology evolved into separating primary and secondary effects. In nature, the two effects would not separate. Each molecule of CO2 being heated would be the primary effect for everything that followed. But studying what nature does is way too complex. There had to be a concept of heat being created, followed by modeling of complexities as secondary (feedback) effects.
This approach is what physics evolved into over the past 150 years: combine a whole bunch of complexity into a single number, equation or constant in order to reduce complexity to mathematics. Averaging is used extensively in climatology for this reason. So instead of looking at each molecule of CO2, an average heat produced by CO2 was viewed as a primary effect (called forcing). This number could be fed into models as heat for a starting point in evaluating infinite complexities as secondary effects. Like everything else in climatology, it is not possible to actually determine a primary effect. It is calculated with the ultra-simplistic fudge factor combined with another ultra-simplistic conversion factor to get a temperature of 1°C as the primary effect upon doubling CO2 in the atmosphere. There isn't anything close to a methodology for deriving the 1°C, and no source for a derivation can be located. Yet it is called an unquestionable law of physics that is not studied further; only secondary effects are studied.
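
For readers who want to see what a "fudge factor combined with a conversion factor" calculation looks like, here is one commonly cited simplified form, written out as a sketch. It assumes the widely quoted logarithmic expression ΔF = 5.35·ln(C/C₀) W/m² for CO2 forcing and converts forcing to temperature by linearizing the Stefan-Boltzmann relation, ΔT ≈ ΔF/(4σT³), at an assumed effective emission temperature of 255 K. Neither formula nor the 255 K value appears in the text; the sketch only reproduces the 3.7 W/m² and roughly 1°C figures discussed above.

```python
import math

SIGMA = 5.670374419e-8   # Stefan-Boltzmann constant, W/(m^2*K^4)

def co2_forcing(c_ppm: float, c0_ppm: float, coeff: float = 5.35) -> float:
    """Simplified logarithmic CO2 forcing in W/m^2 (assumed form, see lead-in)."""
    return coeff * math.log(c_ppm / c0_ppm)

def no_feedback_warming(forcing_w_m2: float, t_emit_k: float = 255.0) -> float:
    """Linearized Stefan-Boltzmann conversion: dT = dF / (4 * sigma * T^3)."""
    return forcing_w_m2 / (4.0 * SIGMA * t_emit_k ** 3)

forcing = co2_forcing(560.0, 280.0)     # doubling CO2
warming = no_feedback_warming(forcing)
print(f"forcing for doubling: {forcing:.2f} W/m^2")   # about 3.71
print(f"primary warming:      {warming:.2f} K")       # about 0.99
```
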

A lack of common sense in the assumptions and approach to global warming points to ulterior motives. If water vapor is a much stronger greenhouse gas than carbon dioxide, why is there no noticeable difference in temperature between humid and dry air? Why doesn't water vapor pass the tipping point in a thousand places every day? Water vapor varies between zero and three percent all the time, while CO2 is only 0.04%. It's not credible to assume that such an extremely weak element of the atmosphere could produce significant heat. With CO2 at the present level of 400 parts per million, there are 2,500 air molecules around each CO2 molecule. The IPCC admits that the central molecules of CO2 in the atmosphere are saturated, while shoulder molecules do the heating. If shoulder molecules are 5%, there are 50,000 air molecules around each of them. If one person took a hand warmer into a stadium of 50,000 people, how hot would the hand warmer have to be to heat the stadium 1°C?

The fake research is just as condemning. With the IPCC admitting that the central CO2 molecules are saturated, why isn't the saturation studied endlessly? It can be done in minutes and costs next to nothing. The evasiveness indicates ulterior motives. Rip-off is the primary motive in science for contriving global warming. The government spends billions of dollars on fake global warming research, which brings incompetent scientists to the surface, while organizations that get pennies from the energy companies are not allowed to criticize the subject. Which of these fixers stopped driving automobiles? They now want to increase the cost of energy to pay for their contrivances while preventing critics from having anything to say about it, including firing scientists and preventing them from getting grants or publishing for criticizing.

Here's a More Concise Four Point List

The fakery of global warming science is poured in the concrete assumption that critics can't prove what was done because of the complexities, and that it is too expensive for them to do experiments. As explained above, such obfuscation only removes the subject from science. But there is the underlying assumption of corrupters of everything that they are so right that a little lying is better than nothing. The three points below show that global warming cannot occur and that the claims have no knowledge behind them.

Point One: The planet is cooled by the 30% of infrared black body radiation that goes around greenhouse gases and into space and establishes equilibrium with the sun's energy coming in. I think the amount is 50%, because most of it leaves from the atmosphere, where the separation between absorption bands is greater. Wikipedia says 15 to 30% of the infrared radiation goes around greenhouse gases. Without equilibrium, the 100% uncertainty would be an error of 100%. But everyone admits equilibrium occurs, which means infinite error. With equilibrium, no other source of cooling is needed, and with heat escaping this way, there is no subject of global warming by greenhouse gases.

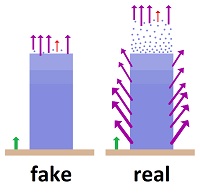

But the radiation gate is not just half open; it's 99.99% open. Block a little radiation, and more goes around. It's like holding a stick in a river to stop the flow, which is the effect of greenhouse gases. While the sun's energy mostly strikes the surface, the leaving energy is spread through the entire atmosphere. It's overkill for cooling power, which is why the atmosphere gets so cold so fast and why greenhouse gases are irrelevant, among other reasons such as saturation. If there were a limiting factor to equilibrium, how the adjustments occur could not be determined in any way, shape or form, which means there is no science to the subject. Climatologists claim there is a shift in equilibrium temperature due to restrictions at the top of the troposphere, but the claim is absurd for many reasons. One is that most of the cooling occurs throughout the atmosphere and very little from the top. They are ignoring the radiation escaping from the rest of the atmosphere.

Point Two: Temperatures cannot be calculated, only measured, which eliminates all claims of global warming science. Heat dissipates, as indicated by the second law of thermodynamics, which means temperatures are constantly changing. No calculation will show how the changes are occurring beyond pure materials insulated from surrounding influences. Physicists pretend to have shortcut methods of getting around this problem, but the logic is not valid. The Stefan-Boltzmann constant (SBC) supposedly shows how temperature and radiation interchange. The impossibility of actually determining these quantities is shown by the fact that the SBC is obviously off by a factor of at least 20 for normal temperatures. Even then, the SBC is misapplied when it is used to determine temperatures in the atmosphere, because it is designed for opaque solids, where radiation escapes only from the surface, while the atmosphere, being a transparent gas, allows radiation to escape throughout.
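
For reference, the forward Stefan-Boltzmann relation for an ideal black body is j = σT⁴, with σ ≈ 5.670×10⁻⁸ W/(m²·K⁴) and T in kelvin. The sketch below assumes emissivity 1 (a perfect black body) and evaluates the relation at the 15°C average surface temperature mentioned earlier; the result, about 391 W/m², is where the 390 W/m² surface emission figure in the energy-balance numbers quoted earlier comes from. The function name is hypothetical.

```python
SIGMA = 5.670374419e-8   # Stefan-Boltzmann constant, W/(m^2*K^4)

def blackbody_emission(temp_c: float, emissivity: float = 1.0) -> float:
    """Radiant exitance j = emissivity * sigma * T^4 in W/m^2, T converted to kelvin."""
    t_k = temp_c + 273.15
    return emissivity * SIGMA * t_k ** 4

print(f"{blackbody_emission(15.0):.1f} W/m^2 at 15 C")   # about 390.9
```
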
The SBC is also misapplied in claiming to show the total atmospheric temperature increase due to a claimed (fake) reduction in radiation emitted into space. This quantity is the fudge factor used for the primary effect of carbon dioxide. Using the SBC for this purpose is not appropriate, because the reduction in radiation that supposedly creates global warming does not apply to a surface but to the atmosphere. For global warming, a correlation is made between radiation and temperature, as the SBC does, but the SBC is not just any old correlation; it is specifically the relationship between the temperature of a surface and its emissions. No such surface is evaluated in applying the SBC (in reverse) to the claimed primary temperature increase caused by CO2 in the atmosphere. Borrowing correlations is standard operating procedure in physics, but doing so is not valid science, as it does not account for the specific details involved.

A major point ignored in the misapplication of the SBC to get temperature out of radiation is the meaning of watts per square meter. In the forward direction, both the temperature and the radiation are on the surface of the emitting solid, and the surface gives meaning to square meters. But climatologists reverse the relationship and use the SBC to represent the heat increase in the atmosphere, where quantities cannot be defined. What square meters are involved? Is the entire surface considered? Nothing is considered. The relationship between temperature and radiation is simply correlated with reversed concepts, going from radiation to temperature instead of from temperature to radiation. Here's one of the problems: if the square meters are on the surface of the earth, the resulting temperature depends upon infinite factors, such as how much depth of the solid is heated. If a millimeter of depth heats 1°C, ten millimeters will heat 0.1°C. No one can say what temperature will result from absorption of 3.7 W/m².
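
The depth point (ten times the depth, one tenth the temperature rise) follows from ΔT = E/(ρ·c·d), the temperature change of a uniformly mixed layer of depth d that absorbs energy E per square meter with no losses. The sketch below assumes water (ρ ≈ 1,000 kg/m³, c ≈ 4,186 J/(kg·K)), a steady 3.7 W/m² absorbed for one year, and, unrealistically, no heat leaving the layer; it only shows how strongly the answer depends on the assumed depth.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600
WATER_DENSITY = 1000.0   # kg/m^3 (assumed)
WATER_CP = 4186.0        # J/(kg*K) (assumed, fresh water)

def layer_warming(flux_w_m2: float, seconds: float, depth_m: float,
                  density: float = WATER_DENSITY, cp: float = WATER_CP) -> float:
    """Temperature rise of a uniformly mixed layer with no heat losses."""
    energy_j_m2 = flux_w_m2 * seconds
    return energy_j_m2 / (density * cp * depth_m)

for depth in (1.0, 10.0, 100.0):
    print(f"{depth:>6.0f} m: {layer_warming(3.7, SECONDS_PER_YEAR, depth):.3f} K")
```
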
The resulting temperature is highly variable, particularly since 70% of the earth's surface is oceans, which absorb to great depth and produce no significant temperature increase for such minuscule amounts of radiation. Ignoring these influences in making reverse correlations is absurd.

Point Three: The CO2 concentration is 400 parts per million in the atmosphere, which means 2,500 air molecules around each CO2 molecule. Heating one molecule in 2,500 has no effect upon air temperatures. Each CO2 molecule would have to be 2,500°C to heat the air 1°C. And then, only the more dilute CO2 molecules on the shoulders of the absorption peaks are said to be unsaturated for creating global warming, which reduces the concentration to somewhere between 5% and 0.1% of the CO2. This means one CO2 molecule in 50,000 or one in 2.5 million, which means heating to 50,000°C or 2.5×10⁶°C. The acting CO2 molecules cannot be heated to such temperatures. The radiation involved is extremely weak. The global average atmospheric temperature of concern is said to be 15°C (59°F), which is equivalent to a cold basement. No significant radiation is given off at that temperature. And then only 8% of the infrared radiation is said to be absorbed by CO2. Climatologists lie in an extreme way in attempting to jack up the amount of radiation involved. When the SBC was applied to the analysis in the energy balance model of Kiehl and Trenberth (published in the IPCC reports), they showed 79% of the energy leaving the surface of the earth as radiation. White-hot metals would not produce that much radiation in an air environment. Later, the NASA version of the energy balance for the planet showed 41% of the energy as radiation, presumably trying to improve the credibility. But again, white-hot metals would not emit that much radiation. An incandescent light bulb only emits about 5% of its energy as radiation.
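
The molecule counts in Point Three and the 79% figure can be reproduced directly. This sketch takes its inputs from the text: 400 ppm CO2, unsaturated shoulder fractions of 5% and 0.1%, and the 390, 78 and 24 W/m² surface fluxes from the energy-balance figure quoted earlier. The function names are hypothetical; the code only does the division, and the step from these ratios to temperatures is the text's proportionality assumption, which the code does not model.

```python
def air_molecules_per_active_co2(co2_ppm: float, active_fraction: float = 1.0) -> float:
    """Number of air molecules per 'active' CO2 molecule.

    co2_ppm: CO2 mixing ratio in parts per million by number of molecules.
    active_fraction: share of CO2 molecules counted as active (e.g. 0.05).
    """
    return 1e6 / (co2_ppm * active_fraction)

def radiative_share(radiation: float, evaporation: float, conduction: float) -> float:
    """Fraction of surface energy loss carried by radiation."""
    return radiation / (radiation + evaporation + conduction)

for frac in (1.0, 0.05, 0.001):
    print(f"active fraction {frac:>5}: {air_molecules_per_active_co2(400.0, frac):,.0f}")
print(f"radiative share: {radiative_share(390.0, 78.0, 24.0):.0%}")   # 79%
```
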
Climatologists can't agree whether it is 79% or 41% radiation leaving the surface of the earth, while they pretend to determine the radiation leaving from the top of the atmosphere to within 1 or 2% error through radiative transfer equations, which require the world's largest computers to evaluate. Nothing in science could be more fake, except relativity.

The Physics Problem

The problem with global warming science is the problem with physics. Physics is not studied the way science is studied. Real science must have a logic that explains relationships; physics does not. Math language replaces logic in physics. Math language cannot be real, because the human ability to produce math is too limited to contain the logic in the complexities of nature. Yet in physics, the math language is totally unlimited. The language of physics math is unlimited because it is not stabilized; it is malleable, which means it is changed for every purpose. This malleability, combined with unaccountability, makes physics math language nonfalsifiable as well as unlimited. It is more than an analogy to say physics math is a language. It is entirely a language, but unlike normal languages, the dictionary defining the meaning of the words is not stable; the meanings change for every purpose.

An example is the photon. It supposedly defines a particle of radiation. Radiation cannot be a particle, but photon math says it is. This result is due to ultra reductionism. In other words, the photon particle encompasses huge amounts of complexity that are reduced to ultra-simple mathematical expressions. An analogy to photons would be to say that the animals in Africa are globules (not knowing what an animal is). These globules take up one cubic meter of space and consume food. Such globules do not tell the difference between monkeys and elephants. The one cubic meter of space is too large for a monkey and too small for an elephant.
But a globule can be represented with a mathematical constant, such as Planck's constant, while the biology of Africa cannot. The photon concept has this problem. Everyone knows the energy in light depends upon its intensity. The intensity depends upon the temperature of the source. Yet there is no place in the photon concept for variations in intensity or temperature of the source. Crude elements of these questions are taken up with the Stefan-Boltzmann constant, but it is a crude and defective macro-analysis, whereas photons are a micro-analysis, and they leave out too much in the area of intensity and temperature.

There is no point in making such calculations, as no significant amount of the radiation of concern goes from the ground to the top of the atmosphere. Most radiation goes into space from all points in the atmosphere, and very little from the top.

As recently as 5 to 10 years ago, a common claim was that 5% of the primary absorption peak was not saturated. One problem with that claim is that increasing the distances by a factor of twenty still results in saturation over moderate distances. Twenty times 10 meters gives saturation in 200 meters at ground level, and twenty times 70 meters gives saturation in 1,400 meters at 9 km up.

Even if there were a primary effect, the concept of secondary effects is absurd. An output increasing the input that causes it is called positive feedback. It causes a rapid and repeated compounding of effects. For temperature, it's called thermal runaway, which is a problem in electronics. If it were possible in the atmosphere, everything would have been fried by now, as real temperatures constantly vary.

How equilibrium is achieved cannot be determined, because it involves adjustments by every factor at play.